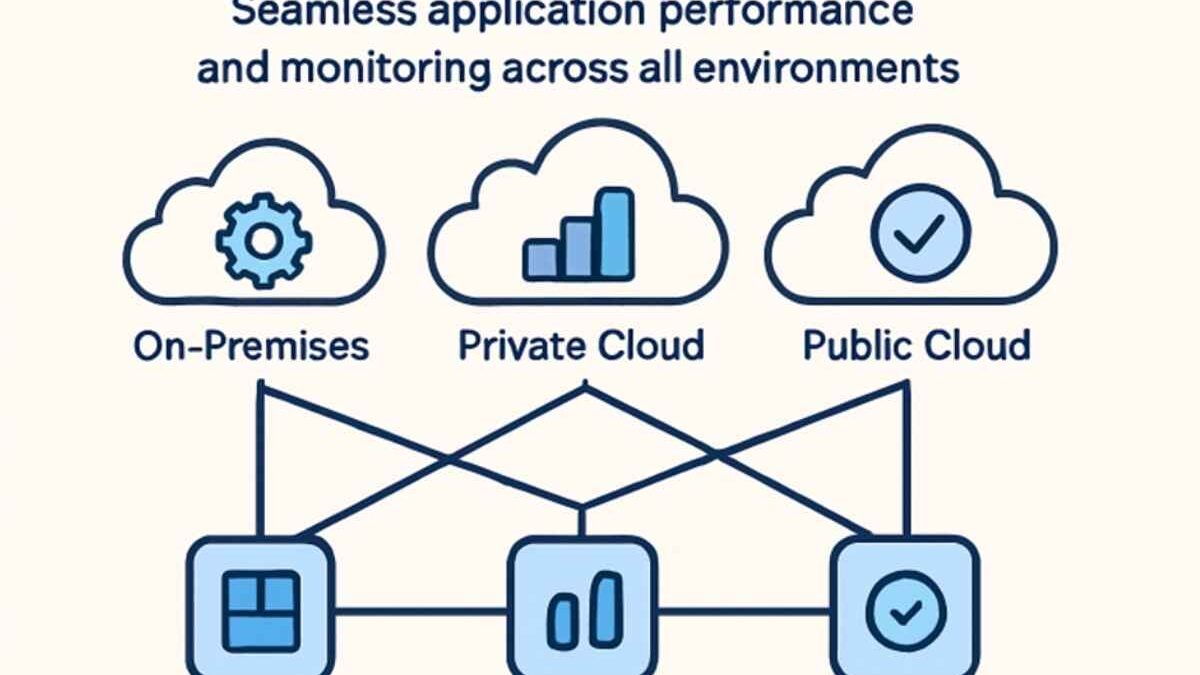

Today’s digital businesses rely on applications that must run smoothly across on-premises data centers, private clouds, and public cloud providers. Getting this hybrid setup right is key to delivering fast, reliable digital experiences to users worldwide.

To make that happen, companies must optimize application performance wherever their workloads run. A central part of this is comprehensive application performance monitoring (APM), which helps teams spot and fix problems before they affect users, reducing downtime and improving customer satisfaction.


Why Modern Application Performance Matters

As IT systems grow, organizations must manage a mix of legacy on-premises setups and newer cloud services. Keeping performance consistent across all of them is challenging.

To handle this complexity, companies are adopting newer strategies such as cloud-native architectures, Infrastructure as Code, multi-cloud models, continuous monitoring, and edge computing. These approaches help keep applications scalable, reliable, and performant in every environment.

Leveraging Cloud-Native Technologies

Cloud-native technologies have changed the way we build and manage apps. Approaches and tools such as microservices, containers, and Kubernetes let businesses split large applications into smaller, independently deployable parts.

Key Benefits

- Scalability: Apps can scale up or down automatically based on demand.

- Resilience: If one component fails, the rest can keep running.

- Faster Deployments: Automation and CI/CD pipelines make updates faster and reduce errors.

This flexibility helps businesses launch features quickly and expand globally with less risk of downtime.
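Automatic scaling is usually driven by a simple proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas * current metric / target metric)), adding a tolerance band and min/max bounds. The function name and default values are illustrative assumptions; this is not Kubernetes' own code.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional autoscaling sketch (hypothetical helper, HPA-style rule)."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target metric must be positive")
    ratio = current_metric / target_metric
    # Ignore small fluctuations so the workload does not flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


# 4 replicas at 90% average CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
# 4 replicas at 20% average CPU against a 60% target: scale in.
print(desired_replicas(4, 20, 60))  # 2
```

The tolerance band matters in practice: without it, tiny metric changes would trigger constant resizing.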

Implementing Infrastructure as Code (IaC)

Infrastructure as Code (IaC) automates how you build and manage IT infrastructure. Instead of configuring servers, storage, and networks by hand, you define them in code that can be versioned, reviewed, and applied automatically.

Why It Matters

- Consistency: Environments built from the same code behave the same way, so fewer bugs come from configuration drift.

- Speed: Automated deployments save time.

- Efficiency: Reduces manual errors and simplifies rollbacks.

IaC bridges the gap between development and operations teams, helping them deliver updates faster and more reliably.
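At the core of most declarative IaC tools is a "plan" step: compare the desired state declared in code with the actual state, then create, update, or delete resources to reconcile them. The sketch below models that idea with plain dictionaries. The resource names, the `plan`/`apply_plan` helpers, and the state format are hypothetical; a real tool would call cloud provider APIs instead of updating a dict.

```python
from typing import Dict, List

# Hypothetical resource model: resource name -> its settings.
State = Dict[str, Dict[str, str]]


def plan(desired: State, actual: State) -> Dict[str, List[str]]:
    """Diff the desired state (declared in code) against the actual state."""
    return {
        "create": sorted(n for n in desired if n not in actual),
        "update": sorted(n for n in desired if n in actual and desired[n] != actual[n]),
        "delete": sorted(n for n in actual if n not in desired),
    }


def apply_plan(desired: State, actual: State) -> State:
    """Return the actual state after reconciling it with the desired state."""
    changes = plan(desired, actual)
    new_state = {n: dict(cfg) for n, cfg in actual.items() if n not in changes["delete"]}
    for name in changes["create"] + changes["update"]:
        new_state[name] = dict(desired[name])
    return new_state


desired = {
    "web-server": {"size": "medium", "region": "eu-west"},
    "database": {"size": "large", "region": "eu-west"},
}
actual = {
    "web-server": {"size": "small", "region": "eu-west"},
    "old-cache": {"size": "small", "region": "eu-west"},
}
print(plan(desired, actual))
# {'create': ['database'], 'update': ['web-server'], 'delete': ['old-cache']}
actual = apply_plan(desired, actual)
print(plan(desired, actual))
# {'create': [], 'update': [], 'delete': []}
```

Because applying the same code twice produces no further changes, a rollback amounts to re-applying the previous version of the desired state.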

Adopting a Multi-Cloud Strategy

Many companies are shifting toward multi-cloud environments, using multiple providers to balance cost, performance, and flexibility.

Strategic Benefits

- Resilience: Reduces dependence on a single vendor, so one provider's outage need not take down every workload.

- Cost Optimization: Lets teams choose the best-value platform for each workload.

- Flexibility: Teams can use region-specific services and more easily comply with data residency laws.

To succeed, organizations need consistent governance, secure connections, and strong multi-cloud performance monitoring tools.
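Choosing "the best-value platform for each workload" can be framed as a constrained selection: filter out offers that break a hard requirement (latency budget, data residency), then pick the cheapest remaining one. The sketch below assumes hypothetical provider names and illustrative prices and latencies; in practice these inputs would come from your own billing data and performance monitoring.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Offer:
    provider: str            # hypothetical provider name
    hourly_cost: float       # illustrative price per hour, not real pricing
    p95_latency_ms: float    # measured latency to the workload's users
    meets_residency: bool    # satisfies the workload's data-residency rules


def pick_offer(offers: List[Offer], max_latency_ms: float) -> Optional[Offer]:
    """Return the cheapest compliant offer within the latency budget, or None."""
    eligible = [o for o in offers
                if o.meets_residency and o.p95_latency_ms <= max_latency_ms]
    if not eligible:
        return None
    # Cheapest first; break ties on lower latency.
    return min(eligible, key=lambda o: (o.hourly_cost, o.p95_latency_ms))


offers = [
    Offer("provider-a", 0.40, 35.0, True),
    Offer("provider-b", 0.32, 80.0, True),
    Offer("provider-c", 0.30, 40.0, False),
]
print(pick_offer(offers, max_latency_ms=50).provider)   # provider-a
print(pick_offer(offers, max_latency_ms=100).provider)  # provider-b
```

Treating compliance and latency as hard filters, and cost only as the ranking key, keeps the cheapest option from winning when it would violate a data law or user-facing performance target.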

Continuous Monitoring and Analytics

With applications spread across many platforms, real-time monitoring has become essential. Tracking metrics such as latency, error rates, and throughput helps teams detect degradation early and prevent downtime.

Best Practices

- Monitor key performance indicators (KPIs) for all core services.

- Set up automated alerts to catch issues early.

- Use analytics to find performance trends and optimize resources.

Strong monitoring tools help maintain reliability and a good user experience across all systems.
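The KPIs and automated alerts above can be illustrated with a small evaluation over one time window: compute p95 latency, error rate, and throughput, then flag any threshold breach. This is a minimal sketch; the `Request` record, the 300 ms and 1% thresholds, and treating HTTP 5xx as errors are illustrative assumptions, not recommended values. It uses the nearest-rank definition of a percentile.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Request:
    latency_ms: float
    status: int  # HTTP status code


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate_window(requests: List[Request], window_seconds: float,
                    p95_limit_ms: float = 300.0,
                    error_rate_limit: float = 0.01) -> Tuple[Dict[str, float], List[str]]:
    """Compute KPIs for one window and return any threshold breaches as alerts."""
    if not requests:
        return {}, ["no traffic received in window"]
    p95 = percentile([r.latency_ms for r in requests], 95)
    # Count server-side failures (5xx) as errors.
    error_rate = sum(1 for r in requests if r.status >= 500) / len(requests)
    metrics = {
        "p95_ms": p95,
        "error_rate": error_rate,
        "throughput_rps": len(requests) / window_seconds,
    }
    alerts = []
    if p95 > p95_limit_ms:
        alerts.append(f"p95 latency {p95:.0f} ms exceeds {p95_limit_ms:.0f} ms")
    if error_rate > error_rate_limit:
        alerts.append(f"error rate {error_rate:.1%} exceeds {error_rate_limit:.1%}")
    return metrics, alerts


# One minute of illustrative traffic: 90 fast requests, 8 slow ones, 2 server errors.
window = ([Request(120.0, 200)] * 90 + [Request(900.0, 200)] * 8
          + [Request(150.0, 503)] * 2)
metrics, alerts = evaluate_window(window, window_seconds=60)
for alert in alerts:
    print(alert)
# p95 latency 900 ms exceeds 300 ms
# error rate 2.0% exceeds 1.0%
```

Tracking a high percentile rather than the average matters here: the mean latency of this window is well under the limit, yet one user request in twenty is slow.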

Integrating Edge Computing

As data volumes grow and speed becomes more critical, edge computing is changing how performance is managed. It processes data close to where it is generated, such as IoT devices or remote sensors, which reduces latency and improves efficiency.

Benefits of Edge Computing

- Reduced Latency: Decisions can be made in near real time, without a round trip to a distant data center.

- Bandwidth Optimization: Less data sent to central servers, saving costs.

- Higher Reliability: Local processing keeps apps running even when the connection to the cloud is weak or intermittent.

When combined with cloud systems, edge computing enables faster, smarter, and more resilient digital operations.
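The bandwidth saving comes from processing data locally and sending only what the central system needs. The sketch below shows one common pattern under simple assumptions: an edge node reduces a batch of raw sensor readings to a compact summary and forwards individual readings only when they cross an alert threshold. The function name, summary fields, and threshold are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def summarize_at_edge(readings: List[float], alert_threshold: float) -> Dict[str, object]:
    """Reduce a batch of raw readings to a summary plus any anomalous values."""
    if not readings:
        raise ValueError("no readings to summarize")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Only out-of-range readings are forwarded individually.
        "anomalies": [r for r in readings if r > alert_threshold],
    }


# Illustrative batch: 599 normal temperature readings plus one spike.
batch = [21.0] * 599 + [35.0]
summary = summarize_at_edge(batch, alert_threshold=30.0)
print(summary["count"], summary["anomalies"])  # 600 [35.0]
```

Instead of 600 raw values, the edge node sends one small summary, while the spike still reaches the central system for immediate attention.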

Final Thoughts

To stay competitive in today’s digital landscape, businesses need a comprehensive approach to application performance optimization. By embracing cloud-native solutions, Infrastructure as Code, multi-cloud strategies, continuous monitoring, and edge computing, organizations can improve performance, reliability, and their pace of innovation, no matter where their applications run.